...

If you register, you will be given a piece of paper with your login information. The information on the paper should contain:

Credentials EXAMPLE Login name aws-lsstXX Password Oh19-EXAMPLE AWS Access Key ID AKIAIOSFODNN7-EXAMPLE AWS Secret Access Key wJalrXUtnFEMI/K7MDENG/bxp-Fake-EXAMPLEKEY You need the Login name and Password in the next step, will use the AWS SSH Keys later.

- Navigate, in your internet browser of choice, to https://sanpadhi.signin.aws.amazon.com/console and sign in. If you have a password manager, it may try to auto-fill your personal Amazon credentials, but we want to use login credentials specific to today's tutorial.

- Leave the "Account ID or Alias" unchanged (it should read "sanpadhi" who is kindly facilitating this tutorial).

- Type your unique Login name in the "IAM user name" field.

- Type the shared Password in the "Password" box.

- Leave the "Account ID or Alias" unchanged (it should read "sanpadhi" who is kindly facilitating this tutorial).

- You will be redirected to the AWS Dashboard. We'll be using the EC2 (Elastic Compute) service to create a kind of virtual server, called an instance.

- Click on the "Services" tab at the top, next to the AWS logo.

- Type "EC2" in the search bar, and select EC2 by clicking on it.

- You will be redirected to the Elastic Compute Dashboard.

- On the left hand side there is a panel with options. Under the "Image" section, select "AMIs."

- On the left hand side there is a panel with options. Under the "Image" section, select "AMIs."

- You will be redirected to the Amazon Machine Image selection page. We already have a "private" AMI (Amazon Machine Image) ready to go for this demo. The default AMIs shown on this page are ones you created and own, so you won't see any at first.

- Click on the drop-down triangle and select "Private Images."

- Find the AMI named "demo_master" (ami-07d6c4c78c1530ff8) and click on it to select it. It has the friendly suggestion "Launch me!" as a name.

- Click the blue "Launch" button.

- Click on the drop-down triangle and select "Private Images."

- You will be redirected to the Launch Instance Wizard. The Wizard allows you to configure a variety of things about your instance. You will see many different instance types, and a "micro" free-tier option may be pre-selected.

- Scroll way down and select m5.xlarge for this demo. This instance has 4 CPUs and 16 GB RAM. You could select a different one if you prefer; the specs are different so the performance varies.

- On the top of the Launch Instance Wizard page, in blue numbered text, you can see various different machine configurations you can select. Feel free to browse through them; most of the defaults are OK.

- We need to adjust the security settings before launching. Click on "6. Configure Security Group," choose "Select an existing security group," and select the "lsst-demo" (sg-058badbfff072b4ae) security group.

- Click the big blue "Review and Launch"!

- You will be redirected to Wizard Review page. It will warn you "Your instance is not eligible for the free usage tier!" and "Improve your instances' security. Your security group, lsst-demo, is open to the world." This is fine.

- Do a quick check of your setup before launching your instance. Ensure you have selected the "lsst-demo" security group! If not, you can edit it from here.

- Click the big blue "Launch" button in the bottom right of the screen!

- A pop-up window will appear. This window allows you to configure the SSH keys you want to use to connect to the instance.

- Since you are using this account for the first time select "Create a new key pair".

- Enter a Key pair name. We recommend you use your name; for example "Firstname-demo."

- Click "Download Key Pair" to download a <your name>.pem file.

Store this .pem file somewhere safe and easy to remember. If you are running a Linux machine we recommend the `~/.ssh` directory. Most SSH clients will not allow you to use SSH key files if their file permissions are too permissive, so update the permissions to be user-read-only.

Code Block mv ~/Downloads/Firstname-demo.pem ~/.ssh/. chmod 400 ~/.ssh/Firstname-demo.pem

- You are now ready to click in the big blue "Launch Instances" button in the bottom right of the pop-up window.

- Since you are using this account for the first time select "Create a new key pair".

- Your instance is now launching! Click on the ID of your instance and wait for the "Instance State" to turn green.

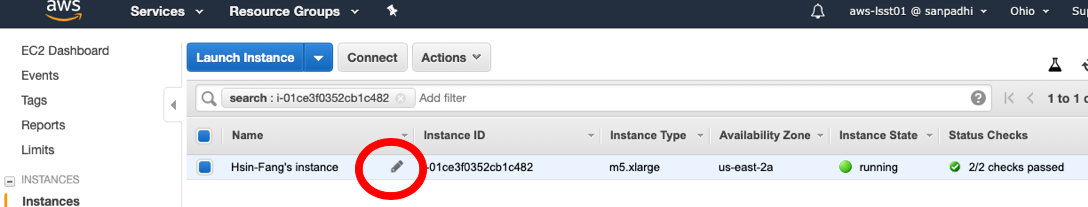

- (OPTIONAL) If you want, you can give your instance a name, just so it's easier to find it later after you navigate away. To add a name, hover your mouse on top of the name box, which is blank by default. Click on the pencil to add a name. Please be careful only do so to your instance, not somebody else's.

- (OPTIONAL) If you want, you can give your instance a name, just so it's easier to find it later after you navigate away. To add a name, hover your mouse on top of the name box, which is blank by default. Click on the pencil to add a name. Please be careful only do so to your instance, not somebody else's.

Congratulations, your instance is now up and running! Click "Connect" for instructions on how to connect to it.

...

ssh to your instance from a Terminal window on your computer.

You may click on your instance in the console and click "Connect" to get an example ssh command.

The example ssh command has `root` as the login user. You must replace `root` with `centos`, adjust the path to your key file, and copy the specific instance address from the example window.

Code Block language bash ssh -i "~/.ssh/Firstname-demo.pem" centos@EXAMPLE-12345-EXAMPLE.amazonaws.com

It can take a while to log in. Don't worry, this is normal.

When ssh asks, are you sure you want to continue connecting? Type "yes".

Once you are connected, run this script to set up your credentials

Code Block language bash source setUpCredentials.sh

You will be prompted to type in the AWS Access Key ID and the AWS Secret Access Key from your paper. If you are ever disconnected and have to re-ssh into the instance, you will need to run this setup again.

You are now ready to process data.

LSST Science Pipelines data processing

In this part of the tutorial we demonstrate two things:

- Using LSST Science Pipelines software run on the S3 and RDS to process some images using Amazon's S3 (Simple Storage Service) and RDS (Relational Database Service) services.

- Scaling the data processing in the cloud using Pegasus and HTCondorwith the help of HTCondor, a workload management system.

In the first part, we want to demonstrate the native support for AWS that exists in the LSST Stack's codeScience Pipelines software. This is demonstrated by invoking an LSST Stack's processing command a command to process image data using the LSST Science Pipelines but placing all of its configurations and data in various the image data and configuration information in the cloud via AWS services.

In the second part, we want to demonstrate how to scale up the example a singular dataset processing step , shown in from the first part , to large datasets.

Running LSST

...

Science Pipelines software using data and configs in S3 and RDS

...

.

Goal: run an example instrument signature removal (ISR) task by invoking LSST Stack functionality directlyusing the LSST Science Pipelines.

The LSST "Data Butler" keeps track of the datasets in its "registry," which is a database. In the registry the middleware enforces The registry requires dataset uniqueness with by using the concept of a collection: for running the same processing step of the same input data multiple times, a different output collection needs to be usedspecified, or else you will get an "OutputExistsError." would be given.

Because we all of us share one same registry in for this tutorial session, and we are all run running the same pipeline processing with the same input, each of you, tutorial users, need tutorial user needs to target a different output collection to avoid conflicting with each other. Doing that effectively says to the Butler: conflicts. This tells the Data Butler, "I understand I am producing identical data that may already exist in some output collections, but I still want to produce it, so place it in a different collection."

To avoid conflicting with output collections of other users please use sufficiently random a unique output collection name.

We recommend prefixing your name or your account id to the name. It is convenient to use an environment variable for this, for exampleinstance:

| Code Block | ||

|---|---|---|

| ||

export OUT="aws-lsst01lsst-DinoMyName" # Change it!! |

To run the LSST pipeline task, copy and paste (or type in) the following command to the Terminal:

| Code Block | ||

|---|---|---|

| ||

pipetask -d 'visit.visit=903334 and detector.detector=22' \ -b "'s3://lsst-demo-pegasus/input/butler.yaml" ' \ -p lsst.ip.isr -i calib,shared/ci_hsc \ -o $OUT \ run \ -t isrTask.IsrTask:isr \ -i calib,shared/ci_hsc \ -o $OUT \ run \ -t isrTask.IsrTask:isr -C isr:/home/centos/configs/isr.py |

The pipetask command breakdown follows:

C isr:/home/centos/configs/isr.py |

Let's break down what this command is doing. For visual clarity, we've broken this single-line command into multiple lines with `\` continuation characters.

- `pipetask` is the command-line argument that says you want to run a "Pipeline Task" with the LSST Science Pipelines,

- the dataset ID (the `-d` dataset ID (the -d flag) selects the data that you want to process, which we specify here as a single "visit" and a single "detector" (this specifies a single CCD image),

the Butler configuration (the `-b b` flag) , targets a YAML file that configures (text file) that has configuration information about where the registry and datastore data live,

- the packages (the `-p p` flag) , that list lists all the packages the pipetask that will search be searched to find the targeted tasks we want to run,

- the input collections (the `-i i` flag) , which are the collections that contain all of the raw and calibration data needed for this process,

- the output collection (the `-o o` flag) , which is the collection where all your processed data will be placed inland,

- `run` specifies that we want to run the task (rather than e.g. just listing the steps) and the subsequent flags specify how the running will take place,

- the task (the `-t t` flag) , is the actual processing task that will execute, in this case the Instrument Signature Removal Task(ISR) task that lives in the package we already specified with `-p`,

- and the task configuration (the `-c c` flag) sets various processing parameters for the task

- LSST tasks are highly configurable,

- and we use a capital `-C` to specify a configuration file that lists all the desired configurations (e.g., convolution kernel size)

- for the task

After a short wait (it could be a few minutes), you should see logs coming out, like below:

...

If you are interested in seeing where your data is coming from and where is it going, you can download the Butler configuration used in this example and inspect it, by doing:

| Code Block | ||

|---|---|---|

| ||

aws s3 cp "s3://lsst-demo-pegasus/input/butler.yaml" . cat butler.yaml |

You will find that the configuration consists of a datastore and a registry. The Datastore datastore is the place where the files actually live and, in . In this demo, targets it points to an S3 Bucket called "lsst-demo-repo." . The Registry registry is a database that describes the datasets and their metadata, and in . In this demo, targets it points to a PostgreSQL RDS instance called demo-registry at port 5432 and in that RDS instance the database called "cihsc".

...

5432 and in that RDS instance the database called "cihsc". If you were running the processing on your local machine, these would just be standard paths to files on your computer instead of "s3" and "postgresql."

If you want to see the source code for the IsrTask, it is on GitHub here.

The following is OPTIONAL (but fun!). You may skip this and continue to run the workflow in

...

Section 3.2

...

.

As your ISR job finishes, the output image, a "postISRCCD", is written into the S3

...

output collection bucket.

...

You

...

can navigate to the S3 bucket to find the output fits image.

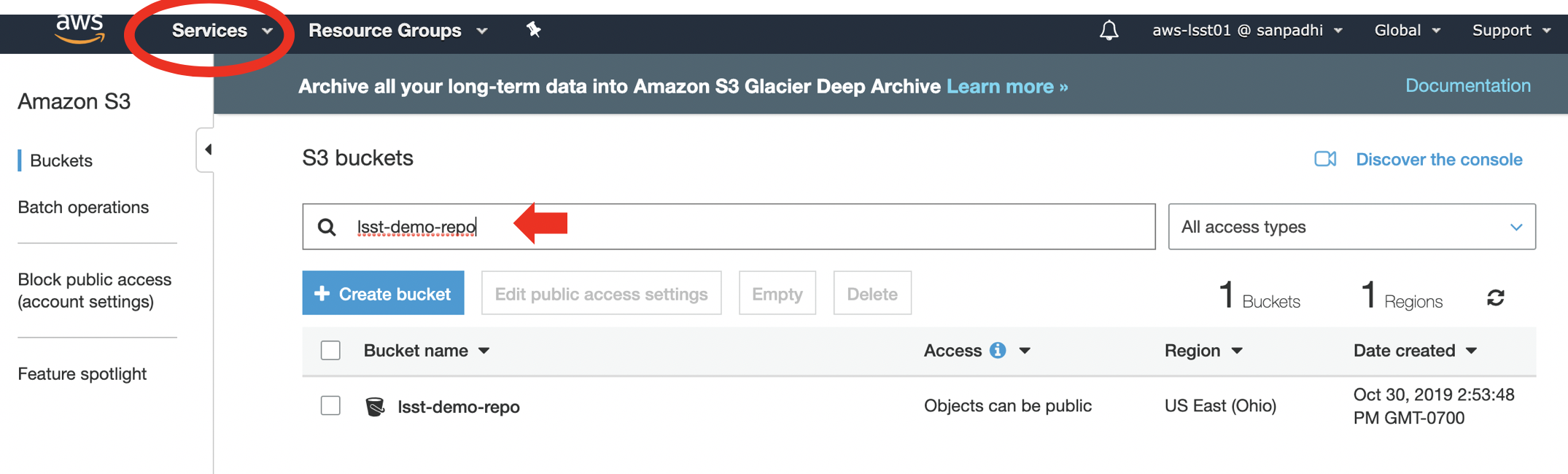

To view the S3 bucket in the AWS console, click on the "Services" tab on the upper left and type "S3" in the search bar. Select "S3" to go to the Amazon S3 page.

...

Then search for the "lsst-demo-repo" bucket.

Click on the "lsst-demo-repo" bucket on its name, and you will be brought to the overview of the bucket.

...

You will see many folder names and you can navigate around the folders like in a filesystem, but it is not! The Amazon S3 console supports a concept of folders, but there is no hierarchy

...

. S3

...

has a flat structure: you create a bucket, and the bucket stores objects.

You should find a folder named after your output collection ("aws-

...

lsst-

...

MyName" in the example above). This was created when you ran the ISR job. Click on your folder and continue clicking down, you will see

"postISRCCD" > 903334 > postISRCCD_903334_22_HSC_NUMBER.

...

fits" (Note this path includes the visit and detector numbers we specified earlier! The last number is distinct.)

You can download the fits file to your instance. For example,

| Code Block |

|---|

aws s3 cp s3://lsst-demo-repo/aws-lsst01lsst-Dino1MyName/postISRCCD/903334/postISRCCD_903334_22_HSC_17.fits . |

(Change the path as appropriate for your outputs.)

Programmatically, we use the Butler API to retrieve the file as a python object. First, launch python.

| Code Block | ||

|---|---|---|

| ||

python from lsst.daf.butler import Butler butler = Butler("/home/centos/butler.yaml", collection="aws-lsst01lsst-Dino1MyName") # Update for your collection name exp = butler.get("postISRCCD", {"visit":903334, "detector":22, "instrument":"HSC"}) |

Getting a "LookupError"? Are you SURE you changed your collection name in the above Python snippet?

Getting a "FileNotFoundError" on the butler.yaml file? Do "aws s3 cp s3://lsst-demo-pegasus/input/butler.yaml ~/butler.yaml" to download a new one or change the path to the location you store the file.

A demo workflow of LSST processing jobs

...

In this example, we use Pegasus* as the workflow manager to submit and monitor the jobs. Pegasus runs workflows on top of HTCondor. The job dependency is represented as a directed acyclic graph (DAG) and HTCondor controls the processes.

...

When you are done, please help us by terminating your instance. On-demand instances continue running until termination, and so do the $$ charges.

Go to the EC2 Dashboard. (If you navigated away to S3, you will have to get back here from the Services menu.) Select you your instance. Click , click "Action" and go to "Instance State", and select "Terminate".

Confirm "Yes, Terminate" if you are sure.

Please only do so if you are sure this is your instance. ! If you are not sure, just leave it running. We can do the cleanup afterwards.

Appendix: Find/Create Your Access Key ID and Secret Access Key

...