What is the scope of the "Science Pipelines" documentation site?

- Note that important obs packages are outside lsst_distrib; omitting the obs packages would reduce the current usability of the Pipelines documentation.

- Technical constraint: tightly coupled packages should be documented together, since the docs will be versioned in lockstep with the codebase (docs embedded in Git, also known as 'docs as code'). This versioning is a feature of LSST the Docs: https://sqr-006.lsst.io.

- We agreed that a lot of middleware (things beyond Princeton and UW) should be included in pipelines.lsst.io because of the tight API integration, including:

- task/supertask framework

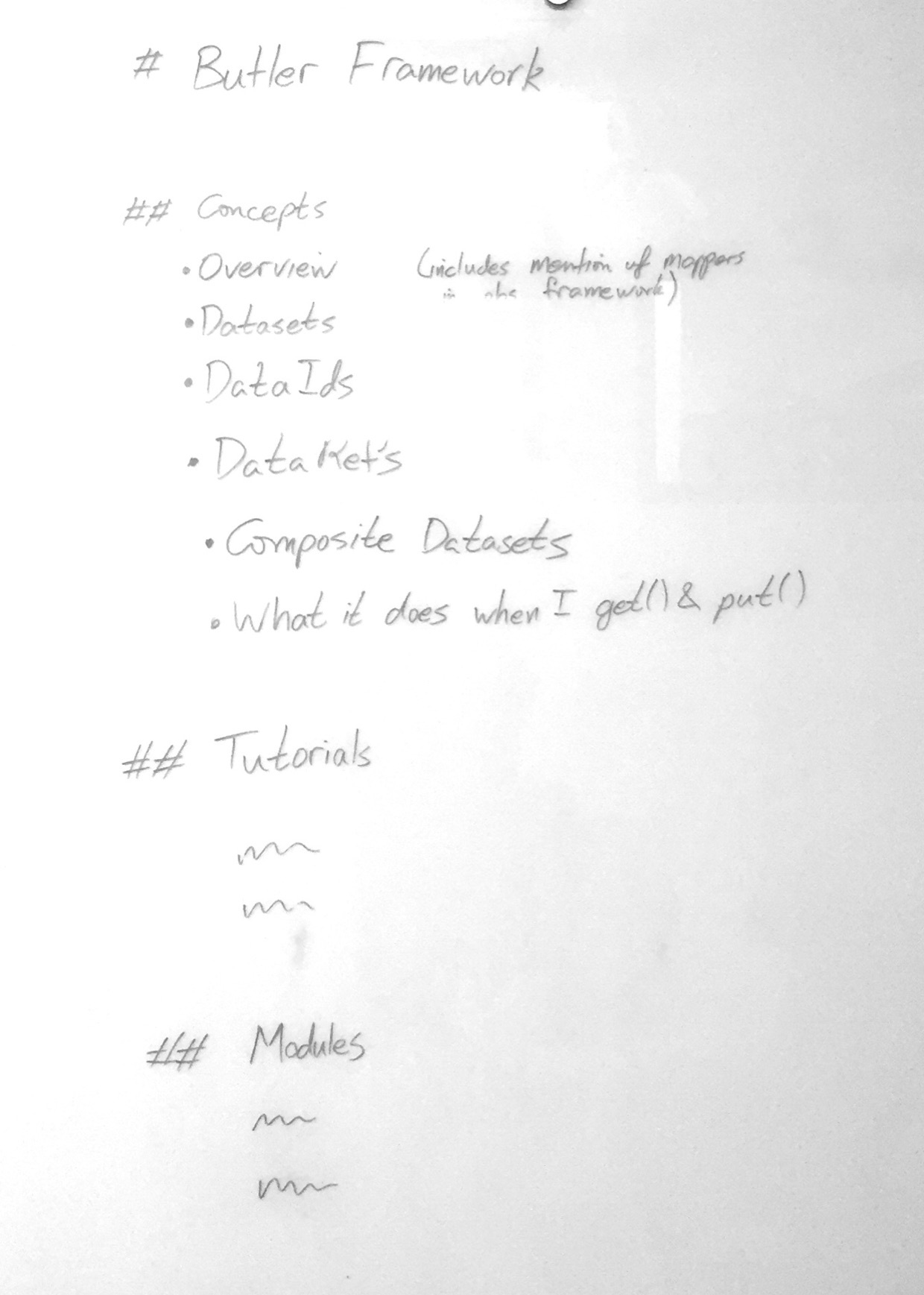

- butler

- logging

- display packages

- Example: document the Butler API in pipelines.lsst.io, but document the DAX service elsewhere.

  - Butler is an API that has implementations for different backends.

  - Document each backend implementation.

  - Document how to write an implementation.

- Example: document the Firefly display package in pipelines.lsst.io, but document Firefly itself elsewhere.

- There is a list of obs packages that will be supported. These will be included in pipelines.lsst.io.

- lsst.validate packages will be in pipelines.lsst.io.

- Can all packages in the lsst Python namespace (excluding simulations) be considered part of pipelines.lsst.io? Is pipelines.lsst.io effectively the documentation for the "lsst" Python package?

  - Think of pipelines.lsst.io as documentation for an open source project that could be used in contexts beyond the LSST AP and DRP pipelines (other observatories, building L3 data products). Data release documentation will specify exactly how the Science Pipelines were used to build a data release.
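The Butler example in the scope list describes one API with several backend implementations. Each backend is documented separately, and there is also a guide to writing new ones. A minimal Python sketch of how that documentation could map onto code is below. It assumes an abstract base class whose docstrings carry the API reference and concrete subclasses whose docstrings carry backend-specific notes. The names `DatasetStore` and `InMemoryStore` and the `get`/`put` signatures are hypothetical. They are not the actual Butler API.

```python
from abc import ABC, abstractmethod
from typing import Any, Dict, Tuple


class DatasetStore(ABC):
    """Hypothetical backend-agnostic storage API.

    Docstrings on this base class would form the single API reference; an
    "implementing a backend" guide would explain how to subclass it.
    """

    @abstractmethod
    def put(self, obj: Any, dataset_type: str, **data_id: Any) -> None:
        """Persist ``obj`` as a dataset of ``dataset_type`` identified by ``data_id``."""

    @abstractmethod
    def get(self, dataset_type: str, **data_id: Any) -> Any:
        """Retrieve the dataset of ``dataset_type`` identified by ``data_id``."""


class InMemoryStore(DatasetStore):
    """Hypothetical backend that keeps datasets in a dict (nothing touches disk).

    Backend-specific behaviour and limitations would be documented here,
    one page per implementation.
    """

    def __init__(self) -> None:
        self._data: Dict[Tuple[str, Tuple[Tuple[str, Any], ...]], Any] = {}

    @staticmethod
    def _key(dataset_type: str, data_id: Dict[str, Any]):
        # Sort data ID items so that keyword order does not change the key.
        return (dataset_type, tuple(sorted(data_id.items())))

    def put(self, obj, dataset_type, **data_id):
        self._data[self._key(dataset_type, data_id)] = obj

    def get(self, dataset_type, **data_id):
        return self._data[self._key(dataset_type, data_id)]


store = InMemoryStore()
store.put([1.0, 2.0], "myDataset", visit=1, ccd=3)
print(store.get("myDataset", ccd=3, visit=1))  # prints [1.0, 2.0]
```

Keeping the contract on the abstract class means the API reference is written once. Each backend page then only has to cover what differs.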

Boundary between Pipelines docs and the Developer Guide

Should the pipelines documentation cover developer and build-oriented topics currently in the DM Developer Guide? Do pipelines users need to be able to create Stack packages to make Level 3 data products?

- lsstsw and lsst-build

- Structure of Stack packages (including sconsUtils and EUPS details)

- etc?

- developer.lsst.io is intended to define policies and practices specific to DM staff. We can't use it as documentation for end users.

- If the build and packaging system is described in pipelines.lsst.io, it could be awkward for other software projects, like Qserv and Sims, that also depend on EUPS, sconsUtils, lsst-build, lsstsw, etc.

- However, putting build/packaging documentation in pipelines.lsst.io probably makes the most sense for astronomers extending the Stack, since pipelines.lsst.io is already where they will look to learn how to write new packages against the Pipelines API. Overall, we can simply establish pipelines.lsst.io as the place where build and packaging are fundamentally documented.

- LDM-151 is where we're designing and planning the Stack.

  - Eventually it will grow to say what the Stack is.

  - pipelines.lsst.io will also say what the Stack is.

  - LDM-151 is change-controlled: it is not continuously deployed like the Stack documentation.

- What if LDM-151 is kept as a record of the Stack for reviews and related communities, and most users only use pipelines.lsst.io?

  - This needs to be discussed by DM/TCT leadership.

  - Existing proposal: https://ldm-493.lsst.io/v/v1/index.html#change-controlled-design-documents (suggests that content is transplanted and single-sourced in design docs).

Who are our users?

- What user group should be prioritized?

- What are common activities that this group wants to achieve? What documentation will assist with that?

- Where do the needs of different groups overlap?

- DM developers in construction

  - Need API references most.

  - Currently learn APIs by introspection, or by reading the source and the code that uses an API. Doxygen isn't useful.

  - Need descriptions of how tasks fit together (both API-wise and as higher-level concepts, even at the LDM-151 level).

  - Need examples to help us develop one package on top of lower-level APIs.

  - Run tasks to validate processing; run on verification clusters.

  - DM is the biggest consumer of pipelines.lsst.io.

- Construction-era science collaborations (Sims users?)

  - Currently consumers of Sims (MAF).

  - Many won't contribute to the Pipelines stack.

  - May want to give feedback, so they need algorithmic descriptions.

- DESC

  - Running real imaging data through the Stack now.

  - Want to contribute feedback (knowledge), e.g., on algorithms.

  - Want to contribute packages, e.g., Twinkles.

  - Want to implement a measurement algorithm and compare its performance against that of the factory algorithms.

  - Need:

    - developer docs (to support development)

    - algorithm background (to comment on)

    - how to run pipelines on their own infrastructure.

- LSST operators/scientists in operations

  - DRP may want an internal ops guide (out of scope).

  - The Science directorate will have needs similar to those of DM developers now.

- 'DataSpace' users in operations

  - SDSS experience: small queries to subset data, complex queries to get objects of interest, and a cut-out service to give context to catalogs.

  - Will want to run tasks on a subset of image data and customize our algorithms.

  - Use the Butler to get/put datasets within their storage quota.

  - Develop and test algorithms that may be proposed for incorporation in DRP.

- Other observatories/surveys

Summary

- DM developer needs generally match the needs of all other groups, possibly except for some conceptual framing documentation: DM developers will be API-oriented, whereas new users will need more conceptual docs.

- Priorities still need to be set.

EUPS Packages as units of organization

- It's natural to organize documentation (to some extent) by EUPS package, given that doc content should live with code. Should every EUPS package have a topic page and be linked from the homepage (as the astropy docs do for sub-packages)? Are there exceptions where documentation that may live in an EUPS package should actually be organized independently of the EUPS package structure altogether?

- What should typical in-package documentation look like? See https://validate-drp.lsst.io as a prototype, and https://docs.astropy.org in general.

- To what extent should documentation refer to EUPS packages (e.g., afw) versus Python namespaces (lsst.afw)?

- Document at the level of the Python module (e.g., afw.image, afw.table, pipe.base), not necessarily at the level of the Git repository.

- Docs live inside packages, and package docs can be built locally and independently of the full pipelines.lsst.io site.

- However, the pipelines.lsst.io homepage can arrange docs for modules into topical groupings.

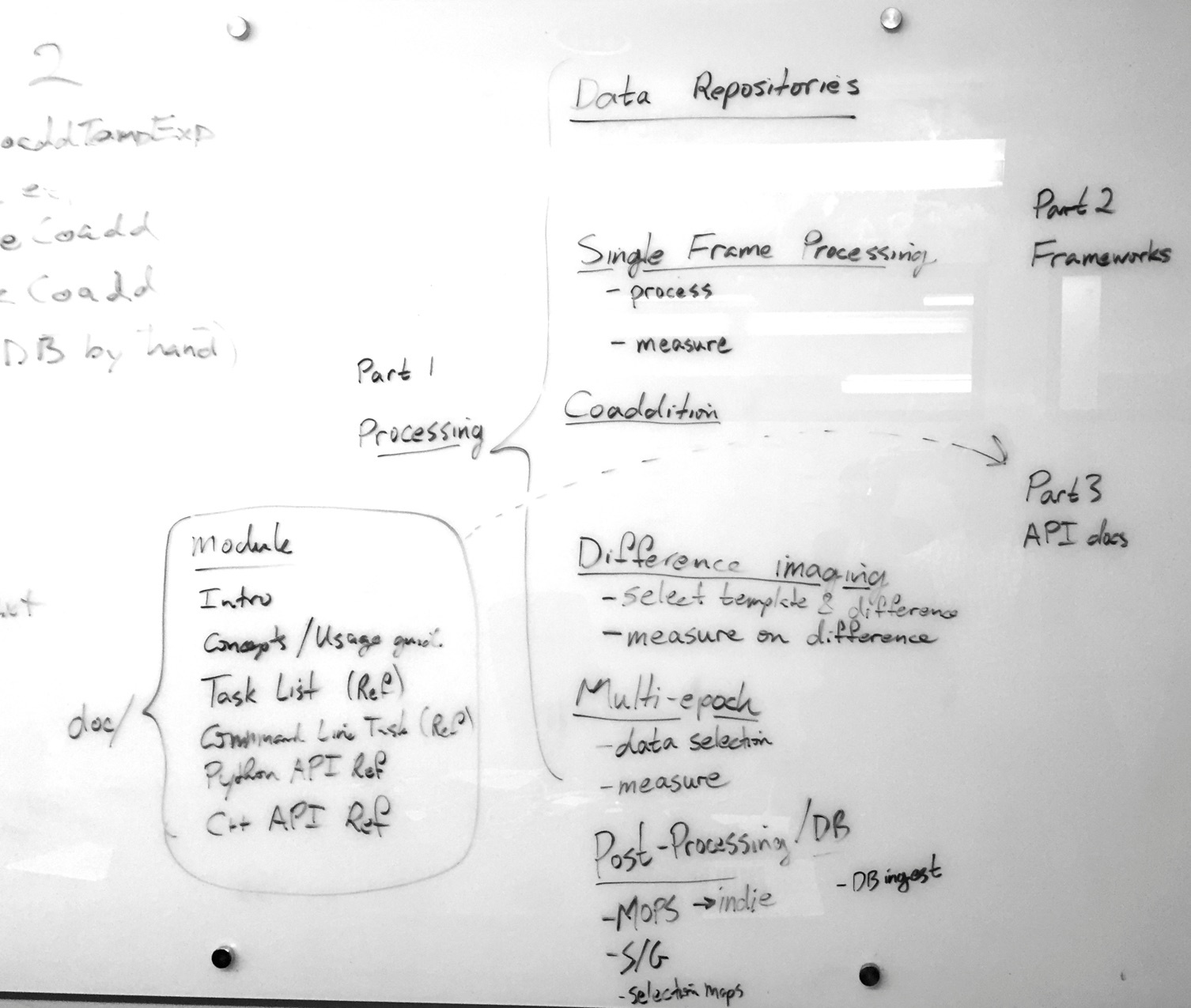

What is the structure of the documentation homepage?

- The homepage is important for orienting users. The structure of the homepage should present a coherent vision for what the Science Pipelines are and how they're used.

- See also https://community.lsst.org/t/pipelines-documentation-site-organization-sketch/1088/1 and LDM-151

Frameworks

- obs

- meas

- modelling

- tasks

- Butler/Data Access Framework

- Data structures

- geometry

- display

- log

- debug

- validate

- Build system

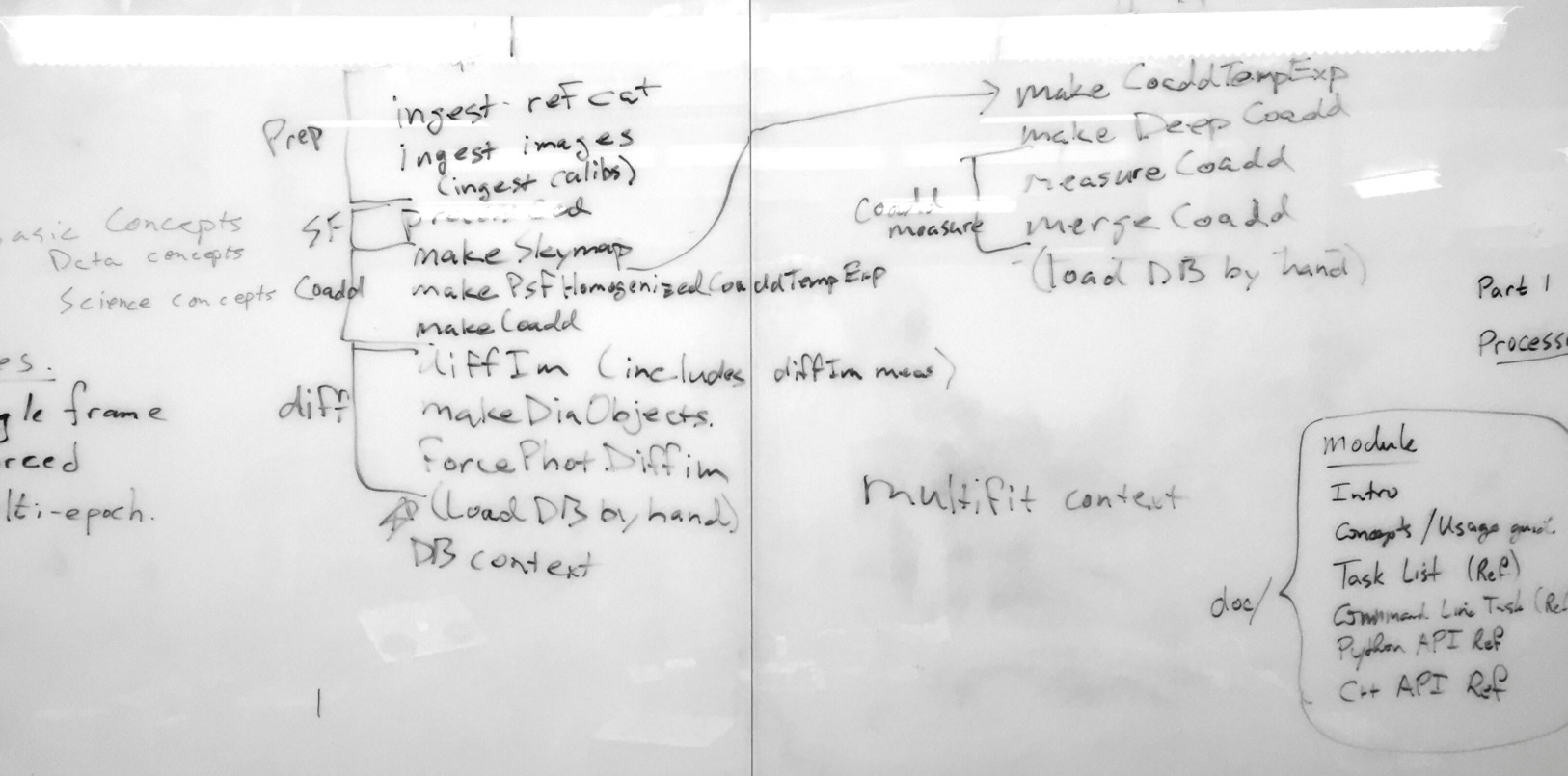

Twinkles workflow.

Homepage structure.

Where should concepts of science interest (such as algorithm details) be documented?

- Docstrings of code that implements algorithms?

- Tasks/Command line task interface references?

- Concept topics that then introduce task/API references?

- To what extent are LSST design documents (e.g., LDM-151) cross-linked and referenced?

- Algorithms don't map 1:1 onto Python/C++ APIs, which indicates that algorithms should be described at a higher level.

- Tasks might be the best home for algorithm documentation.

- There is a need for higher-level overviews that describe "processing topics" and link to the composed tasks.
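The notes suggest that tasks may be the best home for algorithm documentation. A minimal sketch of what that could look like is below. It assumes numpydoc-style docstrings, the convention used by the astropy docs, with the algorithm described in a "Notes" section. The task, its config class, and the algorithm are hypothetical. A real Pipelines task would subclass `lsst.pipe.base.Task` and use `lsst.pex.config`. Neither is used here, so the sketch runs standalone.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SigmaClipConfig:
    """Configuration for the hypothetical SigmaClipMeanTask."""

    n_sigma: float = 3.0  # Clipping threshold, in units of the standard deviation.
    n_iter: int = 3       # Maximum number of clipping iterations.


class SigmaClipMeanTask:
    """Estimate the mean of a sample after iterative sigma clipping.

    Parameters
    ----------
    config : SigmaClipConfig, optional
        Task configuration; defaults are used if not given.

    Notes
    -----
    At each iteration, compute the mean and population standard deviation of
    the surviving values, then reject values more than ``n_sigma`` standard
    deviations from the mean. Stop after ``n_iter`` iterations or when no
    values are rejected. This section is where science users would read and
    comment on the algorithm.
    """

    def __init__(self, config: Optional[SigmaClipConfig] = None):
        self.config = config if config is not None else SigmaClipConfig()

    def run(self, values):
        kept = list(values)
        for _ in range(self.config.n_iter):
            mean = sum(kept) / len(kept)
            std = (sum((v - mean) ** 2 for v in kept) / len(kept)) ** 0.5
            survivors = [v for v in kept if abs(v - mean) <= self.config.n_sigma * std]
            # Stop if nothing was rejected, or if clipping would reject everything.
            if not survivors or len(survivors) == len(kept):
                break
            kept = survivors
        return sum(kept) / len(kept)


task = SigmaClipMeanTask(SigmaClipConfig(n_sigma=1.5))
print(task.run([1.0, 2.0, 3.0, 100.0]))  # prints 2.0
```

Because the algorithm description sits in the task's docstring, the API reference page for the task could double as the algorithm page that higher-level "processing topic" overviews link to.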

How should examples and tutorials be produced?

- All tutorial and in-text examples need to be runnable.

- How do we leverage the example/ directories?

- Should documentation pages essentially be written as Jupyter notebooks?

We need additional prototyping and design discussion before we identify a pattern for producing and testing examples in documentation.
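One candidate pattern for keeping in-text examples runnable is to write them as doctest-style snippets and have a test run execute them. This is only a candidate, since the choice is explicitly deferred. A minimal sketch using Python's standard `doctest` module is below. The page excerpt, the `flux_to_mag` helper, and the `check_page` function are hypothetical.

```python
import doctest
import math


def flux_to_mag(flux, zero_point=27.0):
    """Hypothetical helper: convert a flux to a magnitude with the given zero point."""
    return zero_point - 2.5 * math.log10(flux)


# Hypothetical excerpt of a documentation page that contains an in-text example.
PAGE_TEXT = """
Converting a flux to a magnitude:

>>> flux_to_mag(100.0)
22.0
"""


def check_page(text, namespace):
    """Run every ``>>>`` example in ``text``; return (failed, attempted) counts."""
    parser = doctest.DocTestParser()
    # Copy the namespace: DocTestRunner.run() clears the globals it is given.
    test = parser.get_doctest(text, dict(namespace), "example-page", None, 0)
    runner = doctest.DocTestRunner(verbose=False)
    runner.run(test)
    return runner.summarize(verbose=False)


failed, attempted = check_page(PAGE_TEXT, {"flux_to_mag": flux_to_mag})
print(failed, attempted)  # prints 0 1
```

Sphinx also ships a `sphinx.ext.doctest` extension for the same purpose. Jupyter-notebook pages would need a different kind of execution check.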

How should C++/Python API reference documentation be produced?

- There should be a small discussion between the pybind11 transition team and SQuaRE doc engineering to design and choose a system.

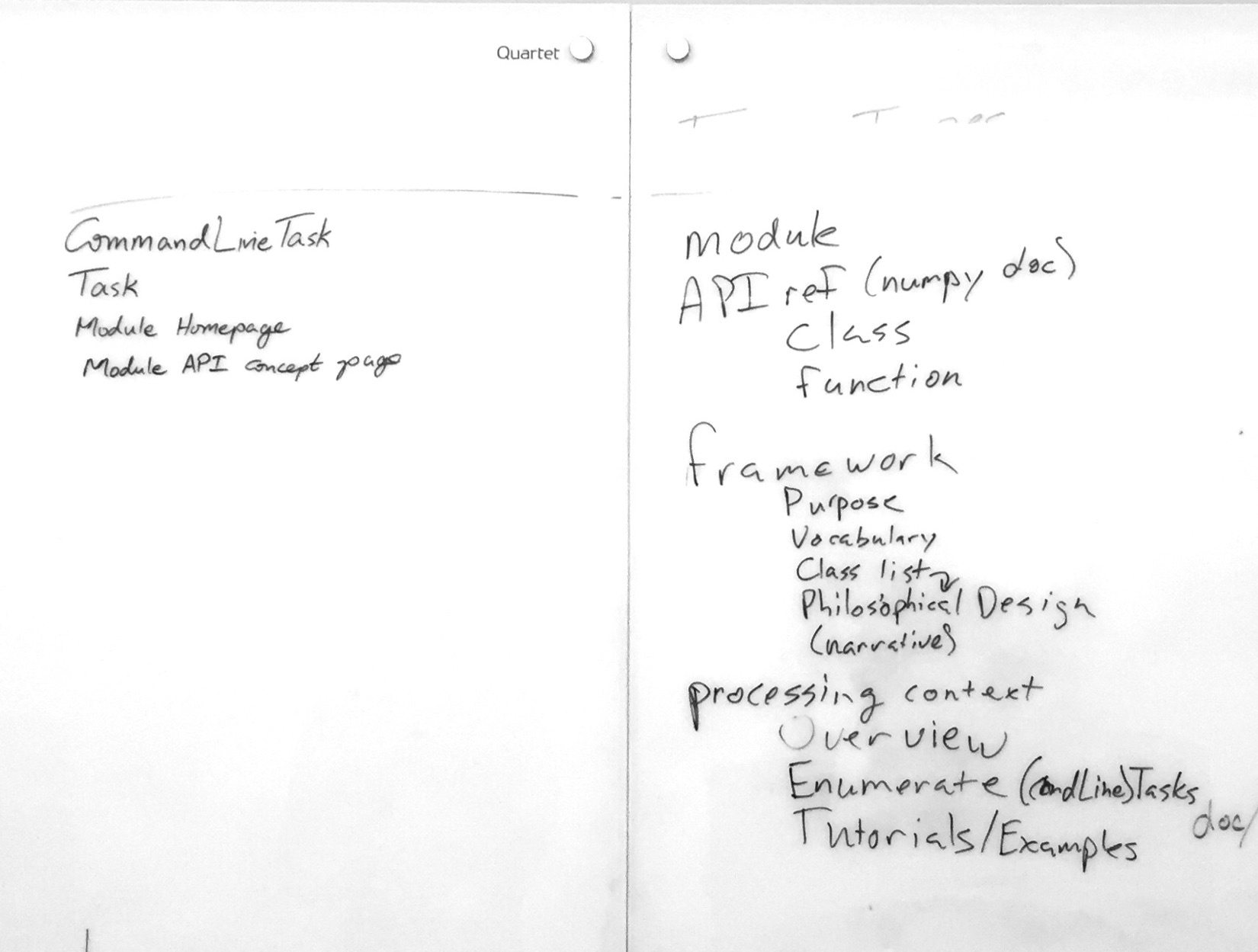

Listing topic types and templates

- What are all the distinct types of things we'll need to document?

- What should each type of content look like?

Preliminary listing.

Task topic type.

README topic type + GitHub summary line.

Measurement framework topic example

Butler framework topic example.

- Approach 1: use community.lsst.org as a source of drafts for docs: see new content on the forum, write the docs, and then post a link to that doc in the original topic. This is a culture and process problem.

- Approach 2: auto-link to community.lsst.org topics from documentation pages. This can be done by finding Community topics that link to the documentation site, and by matching watch words embedded in the metadata of each reStructuredText page. DocEng will build this.
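The matching step of Approach 2 can be sketched in Python. The sketch assumes two things. First, each reStructuredText page declares its watch words in a field list at the top of the file, which Sphinx treats as file-wide metadata. Second, forum topics have already been fetched as title/body/links records. The `:community-keywords:` field name, the record layout, the page URL, and the matching rule are all hypothetical. Fetching topics from community.lsst.org is not shown.

```python
import re
from typing import Dict, List, Set

# Hypothetical file-level metadata field holding comma-separated watch words.
FIELD_RE = re.compile(r"^:community-keywords:\s*(.+)$", re.MULTILINE)


def page_watch_words(rst_text: str) -> Set[str]:
    """Extract lower-cased watch words from a page's metadata field, if present."""
    match = FIELD_RE.search(rst_text)
    if not match:
        return set()
    return {w.strip().lower() for w in match.group(1).split(",") if w.strip()}


def related_topics(page_url: str, rst_text: str, topics: List[Dict]) -> List[str]:
    """Return titles of topics that link to the page or mention one of its watch words."""
    words = page_watch_words(rst_text)
    results = []
    for topic in topics:
        text = (topic["title"] + " " + topic["body"]).lower()
        links_here = page_url in topic.get("links", [])
        # Whole-word match so that e.g. "butler" does not match "butlerish".
        mentions_word = any(re.search(r"\b" + re.escape(w) + r"\b", text) for w in words)
        if links_here or mentions_word:
            results.append(topic["title"])
    return results


url = "https://pipelines.lsst.io/example-page.html"  # hypothetical page URL
page = ":community-keywords: butler, dataId\n\nButler\n======\n"
topics = [
    {"title": "How do I query a dataId?", "body": "Trying to build one.", "links": []},
    {"title": "Coadd question", "body": "See the docs.", "links": [url]},
    {"title": "Unrelated", "body": "Nothing relevant.", "links": []},
]
print(related_topics(url, page, topics))
# prints ['How do I query a dataId?', 'Coadd question']
```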

We'll have many lists of command line tasks in two places: module topic pages and the processing-context sections of the homepage.

- On the homepage we'll want to curate topical groups. Given the small number of command line entry points, this can be maintained manually. Eventually we can add tag metadata to each task to support auto-generated lists.

- On module pages the command line task list can be alphabetical.
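Both kinds of command line task list could eventually be generated from per-task metadata. A minimal sketch is below. It assumes each task carries hypothetical `module` and `tags` fields, where the tags name the homepage processing topics the task belongs to. The task names are used only for illustration. The tag vocabulary is invented and is not an agreed taxonomy.

```python
from collections import defaultdict
from typing import Dict, List

# Illustrative registry: command line task name -> hypothetical metadata.
TASKS = {
    "processCcd.py": {"module": "pipe.tasks", "tags": ["single-frame processing"]},
    "makeCoaddTempExp.py": {"module": "pipe.tasks", "tags": ["coaddition"]},
    "assembleCoadd.py": {"module": "pipe.tasks", "tags": ["coaddition"]},
    "ingestImages.py": {"module": "pipe.tasks", "tags": ["data ingest"]},
}


def homepage_groups(tasks: Dict[str, dict]) -> Dict[str, List[str]]:
    """Group task names by processing-topic tag, for the homepage sections."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for name, meta in tasks.items():
        for tag in meta["tags"]:
            groups[tag].append(name)
    # Sort topics and the tasks within each topic for a stable page layout.
    return {tag: sorted(names) for tag, names in sorted(groups.items())}


def module_listing(tasks: Dict[str, dict], module: str) -> List[str]:
    """Alphabetical list of a module's command line tasks, for its topic page."""
    return sorted(name for name, meta in tasks.items() if meta["module"] == module)


print(homepage_groups(TASKS)["coaddition"])
# prints ['assembleCoadd.py', 'makeCoaddTempExp.py']
print(module_listing(TASKS, "pipe.tasks"))
# prints ['assembleCoadd.py', 'ingestImages.py', 'makeCoaddTempExp.py', 'processCcd.py']
```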

Task framework documentation should explain the philosophy of tasks versus command line tasks

- One stance is that command line tasks are aggregations of tasks. The tasks are what contain the algorithms, so they are where the algorithms should be documented.

- However, we discussed that the distinction may not be meaningful, and that tasks and command line tasks should be documented together in a single topic type.

Important/interesting frameworks are the ones that span multiple modules

- Butler

- measurement

- tasks