Prerequisites: Sign up at the link https://lsst-processing-tutorial-aws.splashthat.com/ to obtain your AWS user login.

Slides: AWS-LSST-tutorial.pdf

Tutorial presenters: Hsin-Fang Chiang (AURA/LSST), Sanjay Padhi (AWS), Dino Bektesevic (University of Washington/LSST), Todd Miller (HTCondor/University of Wisconsin-Madison), Yusra AlSayyad (Princeton/LSST), Meredith Rawls (University of Washington/LSST) with the help of the full AWS-PoC team.

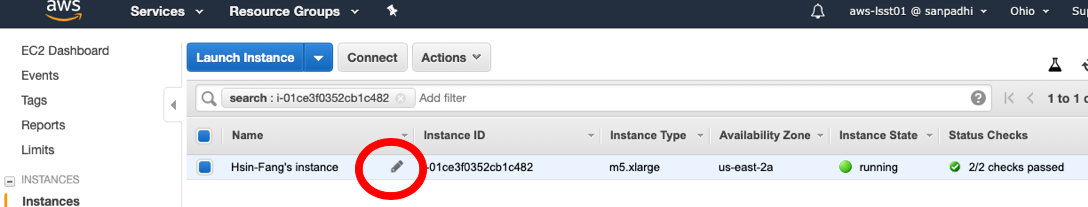

Goal: Launch an Amazon EC2 instance in the AWS console.

If you register, you will be given a piece of paper with your login information. The information on the paper should contain:

| Credentials | Example |
|---|---|
| Login name | aws-lsstXX |
| Password | Oh19-EXAMPLE |
| AWS Access Key ID | AKIAIOSFODNN7-EXAMPLE |
| AWS Secret Access Key | wJalrXUtnFEMI/K7MDENG/bxp-Fake-EXAMPLEKEY |

You need the login name and password in the next step; you will use the AWS keys later.

Store this .pem file somewhere safe and easy to remember. If you are running a Linux machine we recommend the `~/.ssh` directory. Most SSH clients will not allow you to use SSH key files if their file permissions are too permissive, so update the permissions to be user-read-only.

    mv ~/Downloads/Firstname-demo.pem ~/.ssh/
    chmod 400 ~/.ssh/Firstname-demo.pem
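If you want to double-check the result of `chmod 400`, the permission bits can be inspected from Python. The following is a minimal sketch, assuming a POSIX system; the helper name `is_user_read_only` and the throwaway file are hypothetical stand-ins, not part of the tutorial materials.

```python
import os
import stat
import tempfile


def is_user_read_only(path):
    """Return True if the file's permission bits are exactly 0o400 (r--------)."""
    mode = stat.S_IMODE(os.stat(path).st_mode)
    return mode == 0o400


# Demonstrate on a throwaway file standing in for the downloaded key.
with tempfile.TemporaryDirectory() as tmp:
    key_path = os.path.join(tmp, "Firstname-demo.pem")  # hypothetical stand-in file
    with open(key_path, "w") as handle:
        handle.write("not a real key\n")

    os.chmod(key_path, 0o644)  # readable by group and others: too permissive
    before = is_user_read_only(key_path)

    os.chmod(key_path, 0o400)  # same effect as `chmod 400`
    after = is_user_read_only(key_path)

print(before, after)
```

To check your real key, call `is_user_read_only` with the expanded path, for example `os.path.expanduser("~/.ssh/Firstname-demo.pem")`.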

Congratulations, your instance is now up and running! Click "Connect" for instructions on how to connect to it.

Goal: connect to your instance using SSH credentials.

Good job launching your first AWS EC2 instance! Now it's time to connect to your instance so we can get the LSST Science Pipelines software set up.

ssh to your instance from a Terminal window on your computer.

You may click on your instance in the console and click "Connect" to get an example ssh command.

The example ssh command has `root` as the login user. You must replace `root` with `centos`, adjust the path to your key file, and copy the specific instance address from the example window.

    ssh -i "~/.ssh/Firstname-demo.pem" centos@EXAMPLE-12345-EXAMPLE.amazonaws.com

It can take a while to log in. Don't worry, this is normal.

When ssh asks "Are you sure you want to continue connecting?", type "yes".

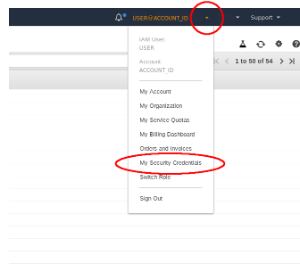

Once you are connected, run this script to set up your credentials:

    source setUpCredentials.sh

You will be prompted to type in the AWS Access Key ID and the AWS Secret Access Key from your paper. If you are ever disconnected and have to re-ssh into the instance, you will need to run this setup again.
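Because the setup has to be repeated after every reconnect, it can help to check whether credentials are present in the current session. The sketch below assumes that `setUpCredentials.sh` exports the standard `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY` environment variables; the contents of that script are not shown in this tutorial, so treat this as an illustration only. The helper name `missing_credentials` is hypothetical.

```python
import os

# Standard AWS environment variable names (assumed to be what the script sets).
REQUIRED = ("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY")


def missing_credentials(env=None):
    """Return the names of required AWS variables that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED if not env.get(name)]


# Example with explicit dictionaries instead of the real environment.
fresh_session = {}
configured_session = {
    "AWS_ACCESS_KEY_ID": "AKIAIOSFODNN7-EXAMPLE",
    "AWS_SECRET_ACCESS_KEY": "wJalrXUtnFEMI/K7MDENG/bxp-Fake-EXAMPLEKEY",
}
print(missing_credentials(fresh_session))
print(missing_credentials(configured_session))
```

Calling `missing_credentials()` with no argument inspects the real environment of the running Python process.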

You are now ready to process data.

In this part of the tutorial we demonstrate two things:

In the first part, we want to demonstrate the native support for AWS that exists in the LSST Science Pipelines software. This is demonstrated by invoking a command to process image data using the LSST Science Pipelines but placing all of the image data and configuration information in the cloud via AWS services.

In the second part, we want to demonstrate how to scale up the single-dataset processing step from the first part to large datasets.

Goal: run an example instrument signature removal (ISR) task using the LSST Science Pipelines.

The LSST "Data Butler" keeps track of the datasets in its "registry," which is a database. The registry enforces dataset uniqueness through the concept of a collection: to run the same processing step on the same input data multiple times, you must specify a different output collection each time, or else you will get an "OutputExistsError."

Because we all share one registry for this tutorial session, and we are all running the same pipeline processing with the same input, each tutorial user needs to target a different output collection to avoid conflicts. This tells the Data Butler, "I understand I am producing identical data that may already exist in some output collections, but I still want to produce it, so place it in a different collection."

To avoid conflicting with output collections of other users please use a unique output collection name. We recommend prefixing your name or your account id to the name. It is convenient to use an environment variable for this, for instance:

    export OUT="aws-lsstXX-MyName" # Change it!!
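The uniqueness rule described above can be illustrated with a toy model. This is not the Data Butler's implementation; `ToyRegistry` and the collection names are hypothetical, and the exception class is defined locally and only borrows the name of the error mentioned above.

```python
class OutputExistsError(Exception):
    """Raised by this toy registry; named after the error mentioned above."""


class ToyRegistry:
    """Remembers which (collection, dataset type, visit, detector) entries exist."""

    def __init__(self):
        self._datasets = set()

    def add(self, collection, dataset_type, visit, detector):
        key = (collection, dataset_type, visit, detector)
        if key in self._datasets:
            raise OutputExistsError(
                f"{dataset_type} for visit {visit}, detector {detector} "
                f"already exists in collection {collection!r}"
            )
        self._datasets.add(key)


registry = ToyRegistry()
registry.add("aws-lsst01-Alice", "postISRCCD", 903334, 22)
# Same data, different collection: accepted.
registry.add("aws-lsst02-Bob", "postISRCCD", 903334, 22)

try:
    # Same data, same collection: rejected.
    registry.add("aws-lsst01-Alice", "postISRCCD", 903334, 22)
    conflict = False
except OutputExistsError:
    conflict = True

print(conflict)
```

This is why every participant exports a different `OUT` value: identical outputs are allowed as long as they land in different collections.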

To run the LSST pipeline task, copy and paste (or type in) the following command to the Terminal:

    pipetask -d 'visit.visit=903334 and detector.detector=22' \
    -b 's3://lsst-demo-pegasus/input/butler.yaml' \
    -p lsst.ip.isr \
    -i calib,shared/ci_hsc \
    -o $OUT \
    run \
    -t isrTask.IsrTask:isr \
    -C isr:/home/centos/configs/isr.py

Let's break down what this command is doing. For visual clarity, we've broken this single-line command into multiple lines with `\` continuation characters.

The Butler configuration (the `-b` flag) targets a YAML file (a text file) that has configuration information about where the registry and data live.
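To make it concrete that the continuation lines form one single command, here is a small sketch that assembles the same invocation as an argument list and prints it on one line. The flags and values are copied from the command above; the function name `build_pipetask_command` and its parameters are hypothetical. It requires Python 3.8 or later for `shlex.join`.

```python
import shlex


def build_pipetask_command(out_collection, visit=903334, detector=22):
    """Assemble the pipetask invocation shown above as one argument list."""
    return [
        "pipetask",
        "-d", f"visit.visit={visit} and detector.detector={detector}",
        "-b", "s3://lsst-demo-pegasus/input/butler.yaml",
        "-p", "lsst.ip.isr",
        "-i", "calib,shared/ci_hsc",
        "-o", out_collection,
        "run",
        "-t", "isrTask.IsrTask:isr",
        "-C", "isr:/home/centos/configs/isr.py",
    ]


args = build_pipetask_command("aws-lsstXX-MyName")
# shlex.join quotes the data query because it contains spaces.
print(shlex.join(args))
```

Changing the `visit` or `detector` argument yields the equivalent command for another image, which is the kind of variation the scaled-up workflow later in this tutorial relies on.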

After a short wait (it could be a few minutes), you should see logs coming out, like below:

If you are interested in seeing where your data is coming from and where it is going, you can download the Butler configuration used in this example and inspect it:

    aws s3 cp "s3://lsst-demo-pegasus/input/butler.yaml" .
    cat butler.yaml

You will find that the configuration consists of a datastore and a registry. The datastore is the place where the files actually live. In this demo, it points to an S3 Bucket called "lsst-demo-repo." The registry is a database that describes the datasets and their metadata. In this demo, it points to a PostgreSQL RDS instance called demo-registry at port 5432 and in that RDS instance the database called "cihsc". If you were running the processing on your local machine, these would just be standard paths to files on your computer instead of "s3" and "postgresql."
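The difference between a cloud-backed and a local configuration comes down to the form of the two locations. The sketch below splits such locations into their parts with the standard library. The exact strings in `butler.yaml` are not reproduced here: the registry host name is a made-up placeholder, and only the bucket name, port, and database name come from the description above.

```python
from urllib.parse import urlparse


def describe(uri):
    """Split a location string into scheme, host, port, and path."""
    parts = urlparse(uri)
    return {
        "scheme": parts.scheme,
        "host": parts.hostname,
        "port": parts.port,
        "path": parts.path,
    }


# Datastore: the S3 bucket named in the text.
datastore = describe("s3://lsst-demo-repo")
# Registry: hypothetical host name; port 5432 and database "cihsc" are from the text.
registry = describe("postgresql://demo-registry.example.com:5432/cihsc")
# A local repository would be a plain filesystem path (hypothetical example).
local = describe("/home/centos/local-repo")

for name, info in (("datastore", datastore), ("registry", registry), ("local", local)):
    print(name, info)
```

A plain path has an empty scheme and no host, whereas the cloud locations carry the `s3` and `postgresql` schemes.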

If you want to see the source code for the IsrTask, it is on GitHub here.

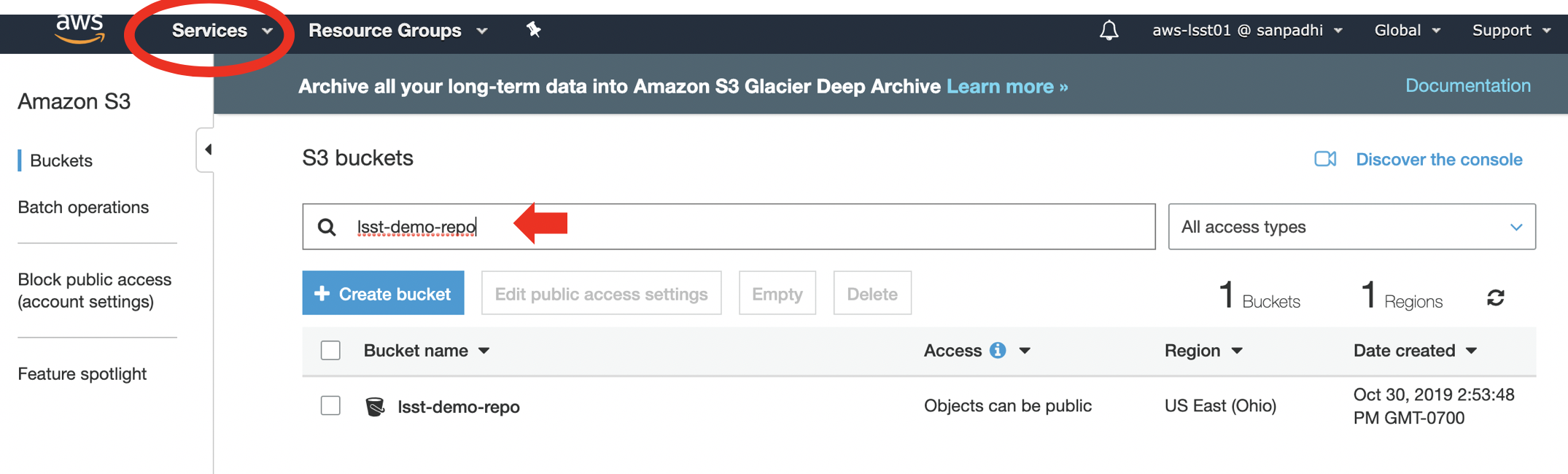

When your ISR job finishes, the output image, a "postISRCCD", is written to your output collection in the S3 bucket. You can navigate to the S3 bucket to find the output FITS image.

To view the S3 bucket in the AWS console, click on the "Services" tab on the upper left and type "S3" in the search bar. Select "S3" to go to the Amazon S3 page. Then search for the "lsst-demo-repo" bucket.

Click on the name of the "lsst-demo-repo" bucket, and you will be brought to the overview of the bucket. You will see many folder names, and you can navigate around the folders as in a filesystem, but it is not a filesystem! The Amazon S3 console supports a concept of folders, but there is no hierarchy. S3 has a flat structure: you create a bucket, and the bucket stores objects.
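The following toy sketch shows how a folder view can be derived from a flat set of object keys by grouping on a prefix and a delimiter. It does not call S3; the function name `list_folder`, the second collection name, and the `README.txt` key are hypothetical, and `NUMBER` is kept as the placeholder used elsewhere in this tutorial.

```python
def list_folder(keys, prefix="", delimiter="/"):
    """Group flat object keys into 'folders' and objects directly under prefix."""
    folders, objects = set(), []
    for key in keys:
        if not key.startswith(prefix):
            continue
        rest = key[len(prefix):]
        head, sep, _ = rest.partition(delimiter)
        if sep:
            # Something follows the delimiter, so the console shows a folder.
            folders.add(prefix + head + delimiter)
        else:
            objects.append(key)
    return sorted(folders), sorted(objects)


# A bucket is just a flat collection of keys like these.
keys = [
    "aws-lsstXX-MyName/postISRCCD/903334/postISRCCD_903334_22_HSC_NUMBER.fits",
    "aws-lsstYY-Other/postISRCCD/903334/postISRCCD_903334_22_HSC_NUMBER.fits",
    "README.txt",
]

top_folders, top_objects = list_folder(keys)
deep_folders, deep_objects = list_folder(keys, "aws-lsstXX-MyName/postISRCCD/903334/")
print(top_folders, top_objects)
print(deep_folders, deep_objects)
```

No folder objects exist anywhere in `keys`; the folders appear only because several keys share a prefix.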

You should find a folder named after your output collection ("aws-lsstXX-MyName" in the example above). This was created when you ran the ISR job. Click on your folder and keep clicking down until you see

"postISRCCD" > "903334" > "postISRCCD_903334_22_HSC_NUMBER.fits" (Note that this path includes the visit and detector numbers we specified earlier! The final number will be distinct for your output.)

You can download the FITS file to your instance. For example,

    aws s3 cp s3://lsst-demo-repo/aws-lsstXX-MyName/postISRCCD/903334/postISRCCD_903334_22_HSC_NUMBER.fits .

(Change the path as appropriate for your outputs.)

Programmatically, we use the Butler API to retrieve the file as a Python object. First, launch Python.

    python

    from lsst.daf.butler import Butler
    butler = Butler("/home/centos/butler.yaml", collection="aws-lsstXX-MyName") # Update for your collection name
    exp = butler.get("postISRCCD", {"visit":903334, "detector":22, "instrument":"HSC"})

Getting a "LookupError"? Are you SURE you changed your collection name in the above Python snippet?

Getting a "FileNotFoundError" on the butler.yaml file? Run "aws s3 cp s3://lsst-demo-pegasus/input/butler.yaml ~/butler.yaml" to download the YAML config file, or change the path to the location where you stored the file.

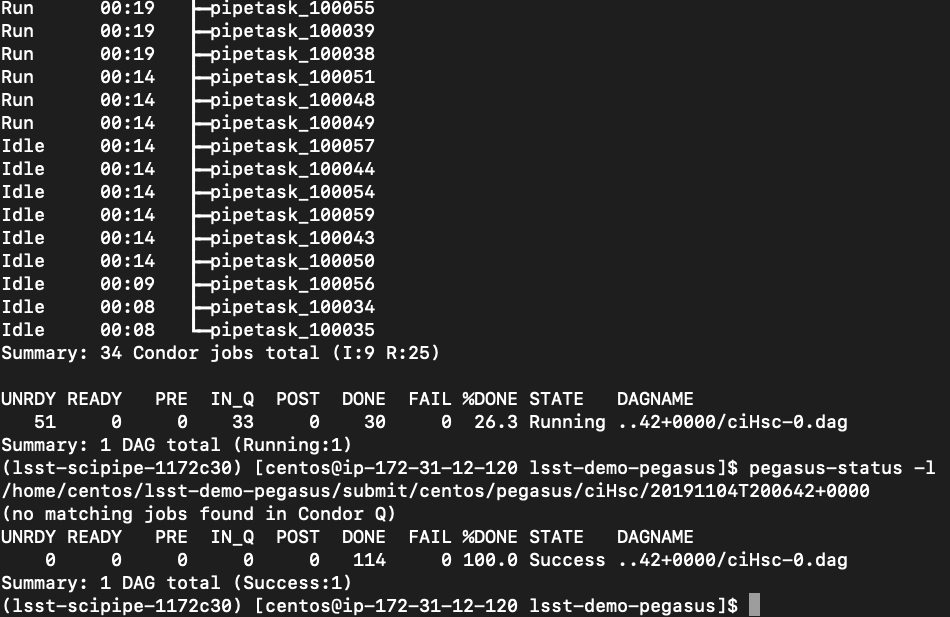

Goal: scale the example processing command to 100 jobs using HTCondor and Pegasus.

In this example, we use Pegasus* as the workflow manager to submit and monitor the jobs. Pegasus runs workflows on top of HTCondor. The job dependency is represented as a directed acyclic graph (DAG) and HTCondor controls the processes.

A Pegasus workflow of 100 jobs has been pre-made for you. We have 33 input raw images. For each input image, 3 sequential tasks are done. We also need one initialization job in the beginning. The dependency graph is as below:
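The job count and dependency structure described above can be sketched with the standard library (Python 3.9 or later for `graphlib`). This is a toy reconstruction, not the contents of the pre-made workflow: the job names are hypothetical, and it assumes the first task of every image depends on the initialization job.

```python
from graphlib import TopologicalSorter

N_IMAGES = 33
TASKS_PER_IMAGE = 3


def build_workflow(n_images=N_IMAGES, tasks_per_image=TASKS_PER_IMAGE):
    """Map each job name to the set of jobs it depends on."""
    graph = {"init": set()}
    for image in range(n_images):
        previous = "init"  # assumed: each chain starts after initialization
        for step in range(tasks_per_image):
            job = f"image{image:02d}_task{step}"  # hypothetical job name
            graph[job] = {previous}
            previous = job
    return graph


workflow = build_workflow()
# A valid execution order: every job appears after the jobs it depends on.
order = list(TopologicalSorter(workflow).static_order())

print(len(workflow))  # 33 * 3 + 1 = 100
print(order[0])       # init
```

The 33 chains are independent of one another once initialization is done, which is what allows many workers to process different images at the same time.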

Follow the steps to submit your workflow.

Download the files and inputs required to run the workflow:

    aws s3 cp --recursive s3://lsst-demo-pegasus lsst-demo-pegasus

Navigate to the folder (feel free to explore the files in it):

    cd lsst-demo-pegasus

In the file wf.dax you can find the job specifications and the graph of operations that will be executed. You will find various environment settings followed by a section that contains commands. You might notice that the commands look similar to the pipetask command you ran above, wrapped in additional XML syntax.

The run_peg.sh file is the workflow submit script for Pegasus. Feel free to look at it. The command of interest is the pegasus-plan command; the rest of the file ensures that the output collection name differs from any used previously.

If it isn't already, make the file executable, and run it.

    chmod +x run_peg.sh
    ./run_peg.sh

The pegasus-status command printed among the other log output should look something like:

    pegasus-status -l /home/centos/lsst-demo-pegasus/submit/centos/pegasus/ciHsc/20191031T202640+0000

but the timestamp part of your path will be different. Running this command lets you inspect the state of the workflow. Also note the name of your output collection printed at the end of the logs, in case you want to navigate to S3 and see the files for yourself.
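If you submit more than once, the timestamp tells the runs apart. The sketch below decodes it, assuming the last path component always has the `YYYYMMDDTHHMMSS+ZZZZ` form seen in the example path; the helper name `submit_time` is hypothetical.

```python
from datetime import datetime, timezone
from pathlib import PurePosixPath


def submit_time(submit_dir):
    """Parse the trailing timestamp component of a submit directory path."""
    stamp = PurePosixPath(submit_dir).name
    return datetime.strptime(stamp, "%Y%m%dT%H%M%S%z")


example = "/home/centos/lsst-demo-pegasus/submit/centos/pegasus/ciHsc/20191031T202640+0000"
when = submit_time(example)
print(when.isoformat())
```

For the example path this corresponds to 31 October 2019, 20:26:40 UTC.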

The workflow will remain idle until Annex workers become available. In the next step we will add workers on which the jobs will be executed.

Goal: add some Annex workers to the HTCondor worker pool.

You might have noticed earlier that the AMI you launched was named "demo_master". On this AMI, HTCondor is already running and is configured in "master" mode. In this mode the instance serves only as a scheduler and resource manager and cannot do any processing itself.

The HTCondor master instructs "worker" nodes to do the actual processing. There are two types of workers that condor_annex can launch: "on-demand" and "spot fleet" workers. The difference between the two is the type of "rental agreement" made. We will launch "on-demand" instances.

On-demand instances are yours until you decide to terminate them.

Spot instances are rented from AWS like on-demand instances, but AWS can reclaim them at any point. A two-minute warning is issued, after which the instance is terminated and assigned to someone else. Because of this, spot fleet instances can be much cheaper, but they are also more precarious to work on.

To add on-demand instances, run

    condor_annex -count 6 \
    -aws-on-demand-instance-type m5.xlarge \
    -aws-on-demand-ami-id ami-04dee9fa7194ef55e \
    -aws-on-demand-security-group-ids sg-058badbfff072b4ae \
    -idle 1 \
    -annex-name $OUT

The command breakdown follows:

`-count` sets the number of instances you want.

`-aws-on-demand-instance-type` sets the instance type. Note that changing this might affect performance.

`-aws-on-demand-ami-id` targets the AMI you want to use. Note that the AMI used for the workers is different from the one used for the master.

`-idle` sets how long (in hours) an instance may sit idle. If it receives no new work within that time, it terminates automatically.

`-annex-name` sets the name of your Annex cluster. It doesn't have to be unique, but a distinct name makes the status output much easier to interpret. Here we simply name it after your first output collection.

We kept the number of instances relatively low so that you can follow along with the workflow and worker status commands. Try any of the following:

Sit back, and see your jobs run. You can repeat the "pegasus-status" command to see how the workflow is going.

Eventually all of your pipetask jobs will finish, provided workers are available.

You are welcome to go to the folder /home/centos/lsst-demo-pegasus/output where you will find the pipeline logs of all jobs.

Congratulations on finishing this tutorial and thank you for your patience following along!

When you are done, please help us by terminating your instance. On-demand instances continue running until termination, and so do the $$ charges.

Go to the EC2 Dashboard. (If you navigated away to S3, you will have to get back here from the Services menu.) Select your instance, click "Action" and go to "Instance State", and select "Terminate".

Confirm "Yes, Terminate" if you are sure.

Please only do so if you are sure this is your instance! If you are not sure, just leave it running. We can do the cleanup afterwards.

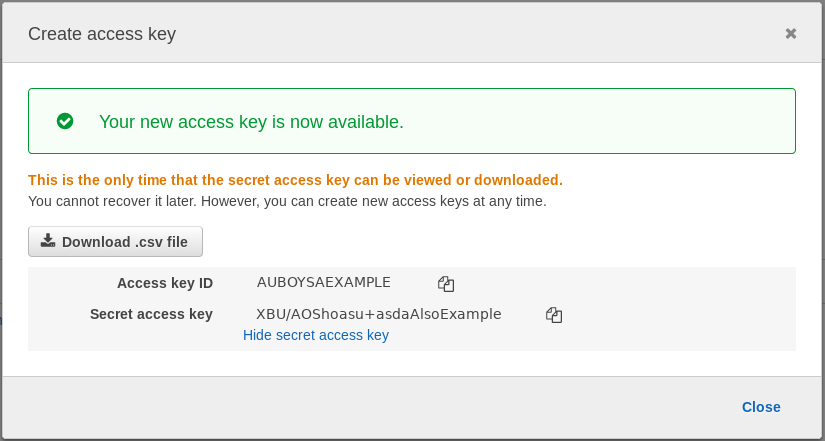

For this tutorial you will be given a paper with your access key and secret access key. If you lose it, you may create a new key as below.

* Disclaimer: The LSST project has not committed to any particular workflow manager as its production tool at this time. The usage here is only meant as an example and is Hsin-Fang Chiang's own choice for this demo session.